Bash 101: Cross-platform command shell with scripting

While I love PowerShell, it isn't the best solution for every problem. We were thinking about implementing PowerShell automation scripts for our Windows hosts, but then decided that maintaining two sets of test scripts (*NIX and Windows) would be impractical given the number of scripts we need to write and maintain.

We settled on creating just one script per test case. To make that happen, we had to turn to Bash.

This article aims to:

- Briefly explain what Bash is

- Introduce a couple of basic concepts

Notes:

- Cygwin Install Article

- GNU Bash information

- Bash Reference Manual

- Bash (Wikipedia Page)

The group I'm currently in has to deal with every version of Windows released in the last 10 years, plus HPUX-PPA, HPUX-IA, Solaris (SPARC), Linux, AIX, etc. Given the disparity in manufacturers, chip architectures and operating systems, it was difficult to find a least-common-denominator scripting system that works across all of these platforms.

Enter Bash. Given its open source nature, versions exist for all the platforms we need to test on. It is a capable shell environment with a LOT of options that make it easy for us to maintain our test scripts.

Getting Started

First, make sure that you have Bash installed on your system. If you are running Windows, ensure that Cygwin is installed and that you have opened the Cygwin Terminal.

Next, open a text editor and save a new blank file named test.sh.

Important note for Windows users: use Notepad++ or a similar tool to ensure that your line endings are the UNIX type (LF) rather than the Windows type (CRLF). If you don't, Bash will choke on the scripts and you'll get confusing errors, because the extra carriage return at the end of each line becomes part of the command.
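If a script has already been saved with Windows line endings, Bash typically fails with errors such as `$'\r': command not found`. As a minimal sketch, the file can be checked and converted from the Cygwin shell using only standard tools; `fix_line_endings` is a hypothetical helper name, not part of Bash or Cygwin.

```shell
#!/bin/bash
# Hypothetical helper: convert a file from Windows (CRLF) to UNIX (LF)
# line endings in place. Only grep, tr and cat are used, so dos2unix
# does not need to be installed.
fix_line_endings() {
    local file="$1"
    local tmp="${file}.tmp.$$"

    # grep -q exits with status 0 if the file contains a carriage return
    if grep -q $'\r' "$file"; then
        # Strip every \r, then copy the result back over the original.
        # Writing with cat (rather than mv) keeps the original file's
        # permissions, so an executable script stays executable.
        if tr -d '\r' < "$file" > "$tmp" && cat "$tmp" > "$file"; then
            echo "Converted $file to LF line endings"
        else
            echo "Could not convert $file" >&2
        fi
        rm -f "$tmp"
    else
        echo "$file already uses LF line endings"
    fi
}

# Example usage:
# fix_line_endings test.sh
```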

Save the following text to test.sh:

```shell
#!/bin/bash

echo "That was easy"
```

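Note: While the first line looks like a comment (and Bash itself does ignore it), it has a special meaning. The #! ("shebang") tells the operating system which interpreter to run the script with, in this case /bin/bash. If bash is installed in a non-standard location, you will need to specify that path here.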
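On some of the platforms listed above, Bash may be installed somewhere other than /bin/bash (for example under /usr/local/bin). You can find its location with `command -v bash`. A common alternative, sketched below, is to let `env` search your PATH for bash so the same first line works on every host; this assumes `/usr/bin/env` exists, which is the case on most UNIX-like systems and on Cygwin. The function name `show_bash_info` is only illustrative.

```shell
#!/usr/bin/env bash
# env searches the PATH for "bash", so this first line works even when
# bash is not installed at /bin/bash.

# Hypothetical helper: report where bash lives on this host and which
# version is running the script.
show_bash_info() {
    echo "bash found at: $(command -v bash)"
    echo "running Bash version: $BASH_VERSION"
}

show_bash_info
```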

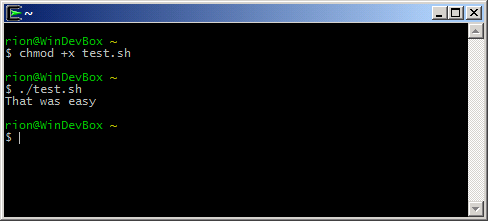

Once saved, you need to make the file executable. To do this:

- Navigate to the file in the Bash (Cygwin) shell

- Run this command: chmod +x test.sh

Now that the file is executable, you can run it like this: ./test.sh

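Note: The ./ (dot-slash) before the filename is REQUIRED. It tells the bash shell to execute the named file in the current directory; without it, bash only searches the directories listed in your PATH, which normally does not include the current directory.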
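If you'd rather skip chmod, you can also hand the file to bash directly with `bash test.sh`, which does not need the executable bit. As a minimal sketch, the hypothetical helper `run_script` below combines both approaches: it uses the `-x` test to check whether the file is executable and prepends ./ to a bare file name so that PATH is not searched.

```shell
#!/bin/bash
# Hypothetical helper: run a script whether or not its executable bit is set.
run_script() {
    local script="$1"

    if [ ! -f "$script" ]; then
        echo "No such file: $script" >&2
        return 1
    fi

    # A bare name like test.sh would be looked up in PATH, so prepend ./
    case "$script" in
        */*) ;;
        *) script="./$script" ;;
    esac

    if [ -x "$script" ]; then
        # Executable (chmod +x was run): execute it directly, like ./test.sh
        "$script"
    else
        # Not executable: pass the file to bash explicitly instead
        bash "$script"
    fi
}

# Example usage:
# run_script test.sh
```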

If all goes well, you should see That was easy printed on your console screen. Here's a screen-cap showing the output of chmod and ./test.sh:

Creating a more complex script

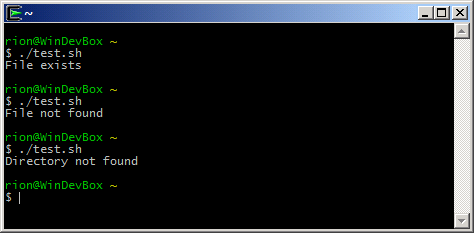

OK, now that you know the basics, let's write a script that:

- Checks if a folder exists

- If so, checks for a file in that folder

- If both checks pass, prints "File exists". If not, prints either "Directory not found" or "File not found"

For this to work, you will need to create a folder named folder in your home directory, containing a file named file.txt:

```shell
#!/bin/bash
#
# This is a test script. It will see if ~/folder/file.txt exists

if [ -d ~/folder ]
then
    if [ -f ~/folder/file.txt ]
    then
        echo "File exists"
    else
        echo "File not found"
    fi
else
    echo "Directory not found"
fi
```
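To try all three outcomes without touching your real home directory, you can point HOME at a temporary directory, since ~ expands to the value of $HOME. The sketch below assumes the script above was saved to a file such as check.sh (a hypothetical name) and made executable with chmod +x; `run_scenarios` is likewise a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: run a check script (like the one above) through all
# three scenarios. Each run gets a throwaway HOME directory, and since ~
# expands to $HOME, your real ~/folder is never created or modified.
run_scenarios() {
    local script="$1"
    local sandbox
    sandbox=$(mktemp -d) || return 1

    # Scenario 1: ~/folder does not exist
    HOME="$sandbox" "$script"

    # Scenario 2: ~/folder exists, but ~/folder/file.txt does not
    mkdir -p "$sandbox/folder"
    HOME="$sandbox" "$script"

    # Scenario 3: both the folder and the file exist
    touch "$sandbox/folder/file.txt"
    HOME="$sandbox" "$script"

    # Clean up the temporary home directory
    rm -rf "$sandbox"
}
```

Running `run_scenarios ./check.sh` should print Directory not found, File not found and File exists, one per line.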

Here's what's going on:

- The lines beginning with # (hash / number sign / pound sign) are all comments (except for the first one, which we explained above).

- If-then-else statements work like this:

```
if [ boolean condition ]
then
    Do Something
else
    Do Something Else
fi
```

The condition between the brackets is evaluated. If it is true, the 'then' clause runs; otherwise, the 'else' clause runs. The 'fi' keyword ("if" spelled backwards) ends the statement. Our script uses a nested if-then-else that first checks for the existence of a directory (with the -d operator), then checks for the existence of a regular file (with -f).

- Once the conditionals are evaluated, one of the echo commands is executed. echo writes a string to standard output, which makes it visible in the terminal.
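Under the hood, [ is itself a command (a Bash builtin equivalent to test), which is why the spaces inside the brackets are required. if runs the then branch when that command exits with status 0 (true) and the else branch otherwise. The sketch below uses the same -d and -f operators plus -e ("exists at all") in an elif chain, which avoids nesting; `describe_path` is a hypothetical helper. It also prints $?, the exit status of the last command, to show what [ returns.

```shell
#!/bin/bash
# Hypothetical helper: classify a path with the test operators used above.
# -d: is a directory, -f: is a regular file, -e: exists at all.
describe_path() {
    local path="$1"

    if [ -d "$path" ]; then
        echo "$path is a directory"
    elif [ -f "$path" ]; then
        echo "$path is a regular file"
    elif [ -e "$path" ]; then
        echo "$path exists but is neither a directory nor a regular file"
    else
        echo "$path not found"
    fi
}

# [ is a command: its exit status (0 = true, 1 = false) is stored in $?
[ -d / ]
echo "exit status of [ -d / ]: $?"   # prints 0, since / is always a directory
```

For example, `describe_path /dev/null` reports that it exists but is neither a directory nor a regular file, because /dev/null is a device.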

Here is a screen-cap of the script results for the three scenarios:

For more information about Bash, see the reference material in the Notes section at the top of this article. Bash is proving to be a powerful tool for system-agnostic scripts, and it will be interesting to see whether I run into any limitations as we move forward with our multi-environment setup.